Introduction

In real-world scenarios, applications grow in data volume, number of users, and so on. As demand grows, resources such as CPU, storage and memory need to be scaled up and down; if you are on the cloud, cloud services can take care of this. However, there are scenarios where scaling these resources alone does not solve the problem. We encountered such a case in one of our projects. This article describes the problem and the solution we implemented.

Problem Statement / Case Study

For one of our clients who had outsourced software development to Cuelogic, we were using Sidekiq for background job processing. The size of our queues changes drastically during the day. When that happens, we increase or decrease the number of workers by automatically adding or removing EC2 instances, depending on the queue size.

Previously, we had only one API server instance (with Sidekiq integrated) processing background jobs. This single instance took 20 minutes to process 30,000 jobs. We wanted more concurrency, so that the same work would finish in less time.

Possible Solution / Evaluations

We first started multiple Sidekiq processes on the single API server, but this consumed more resources and did not achieve the concurrency we were looking for.

So we turned to AWS Auto Scaling: we created an Auto Scaling group, a launch configuration, and an SNS topic to send notifications to our API server.

We used AWS CodeDeploy to deploy the application automatically to new instances and to update it as required.

With scaling, our goal was to process the same number of jobs in less than 5 minutes. This requires multiple API server instances, i.e. scaling the API server horizontally, with each instance running multiple Sidekiq processes (the number depending on its CPU cores).
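As a back-of-the-envelope check on these numbers: one instance handled 30,000 jobs in 20 minutes, about 1,500 jobs per minute, while finishing in 5 minutes needs about 6,000 jobs per minute. The sketch below assumes throughput grows linearly with the number of instances, which is an idealisation (the shared database eventually becomes the bottleneck); the method name `instances_needed` is hypothetical.

```ruby
# Back-of-the-envelope sizing. Assumes each added API server instance
# contributes the same throughput as the original single instance; in
# practice shared resources such as the database limit this.
def instances_needed(jobs:, minutes_per_instance:, target_minutes:)
  per_instance_rate = jobs.to_f / minutes_per_instance # jobs/minute for one instance
  required_rate     = jobs.to_f / target_minutes       # jobs/minute needed to hit the target
  (required_rate / per_instance_rate).ceil
end

# 30,000 jobs took 20 minutes on one instance; the goal is about 5 minutes.
puts instances_needed(jobs: 30_000, minutes_per_instance: 20, target_minutes: 5) # prints 4
```

Under this assumption, four instances bring the run down to roughly 5 minutes; going strictly below 5 minutes needs a fifth instance or more Sidekiq processes per instance.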

So we decided to scale the Auto Scaling instances up or down depending on the number of busy and enqueued Sidekiq jobs, since in the end we want to process more jobs in less time.

Each job uses a database connection, so we also had to consider the number of database connections per second.

Given this limitation, we can launch at most 5 API server instances, each consuming a maximum of 1,000 database connections. A further 500 connections are reserved for web (dashboard) users.
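The cap of five instances follows from the database connection budget. The figures above imply a total of about 5,500 connections (5 × 1,000 for API servers plus 500 for dashboard users); that total is our inference rather than a number stated here, and `max_api_instances` is a hypothetical helper.

```ruby
# Hypothetical helper: how many API server instances fit into the database's
# connection limit after setting aside connections for dashboard users.
def max_api_instances(db_connection_limit:, reserved_for_web:, per_instance:)
  available = db_connection_limit - reserved_for_web
  raise ArgumentError, "reserved connections exceed the limit" if available.negative?

  available / per_instance # Integer division: only whole instances count
end

# Assumed limit of 5,500 connections (implied by 5 x 1,000 + 500).
puts max_api_instances(db_connection_limit: 5_500, reserved_for_web: 500, per_instance: 1_000) # prints 5
```

Sizing from the database side first keeps scale-out from exhausting the connections the dashboard depends on.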

Scaling strategy

The scaling strategy is dynamic, meaning we do not keep five API server instances running all the time. Instances are launched as the number of concurrent jobs (adding prospects) increases. During peak business hours there can be more than 10,000 job requests, while at other times there may be fewer than 1,000. It makes little sense to keep five API servers running for such a small load.

Stopping the API server instances

By default, at least one API server is always running. As the queue shrinks, the extra instances are stopped. For example, suppose the queue initially holds more than 30,000 jobs and 5 API server instances are running. Once enough jobs have been processed that the queue drops to, say, 18,000, one of the 5 instances is stopped and only 4 keep running. Similarly, when the queue drops to 10,000 jobs or fewer, only 2 API server instances keep running and the others are stopped. Finally, when 5,000 or fewer jobs remain in the queue, only one API server instance runs.

Note: Whenever an Auto Scaling instance is terminated, Sidekiq on it must be stopped gracefully; otherwise, any jobs still busy in the Sidekiq processes on that instance will be lost. How we terminate Sidekiq gracefully is explained later in this document.

Auto scale Sidekiq workers on Amazon EC2

We use Sidekiq heavily for background job processing, from image conversion to generating shipment tracking numbers.


We implemented the approach described above as follows:

Queue Size Metric

To trigger scaling up or down based on the queue size, we created a class that checks the Sidekiq queue size and sets the desired capacity of the Auto Scaling group accordingly:

```ruby
require 'aws-sdk-v1' # v1 of the AWS SDK for Ruby (AWS:: namespace)

# AWS_REGION, AWS_ACCESS_KEY_ID, SECRET_ACCESS_KEY and AUTO_SCALING_GROUP_NAME
# are application constants; SidekiqProDetail is an application class.
class AwsMetrics::QueueSizeMetric
  def initialize
    @client = AWS::AutoScaling::Client.new(
      region: AWS_REGION,
      access_key_id: AWS_ACCESS_KEY_ID,
      secret_access_key: SECRET_ACCESS_KEY
    )
  end

  # Sets the Auto Scaling group's desired capacity from the Sidekiq queue size.
  def launch_or_terminate_autoscale_group_instance
    size = SidekiqProDetail.get_api_queued_size

    desired_capacity =
      if size <= 5000     then 1
      elsif size <= 10000 then 2
      elsif size <= 15000 then 3
      elsif size <= 20000 then 4
      else                     5
      end

    # When scaling in to a single instance, only call the API if the group
    # is currently larger than that.
    if desired_capacity == 1
      current = @client.describe_auto_scaling_groups(
        auto_scaling_group_names: [AUTO_SCALING_GROUP_NAME]
      )[:auto_scaling_groups].first[:desired_capacity]
      return if current <= 1
    end

    @client.update_auto_scaling_group(
      auto_scaling_group_name: AUTO_SCALING_GROUP_NAME,
      desired_capacity: desired_capacity
    )
  end
end
```
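The thresholds in this class step up by one instance per 5,000 queued jobs, capped at five (the database-imposed limit). A sketch of the same mapping as a pure function, which can be unit-tested without AWS credentials or a Sidekiq connection; the names `desired_capacity_for`, `JOBS_PER_INSTANCE`, `MIN_INSTANCES` and `MAX_INSTANCES` are hypothetical.

```ruby
# Same thresholds as QueueSizeMetric: <=5,000 jobs -> 1 instance,
# 5,001..10,000 -> 2, ..., >20,000 -> 5 (the database-imposed cap).
JOBS_PER_INSTANCE = 5_000
MIN_INSTANCES = 1
MAX_INSTANCES = 5

def desired_capacity_for(queue_size)
  # Round up so that, e.g., 5,001 queued jobs already ask for 2 instances.
  needed = (queue_size.to_f / JOBS_PER_INSTANCE).ceil
  needed.clamp(MIN_INSTANCES, MAX_INSTANCES)
end

[0, 5_000, 5_001, 12_000, 20_000, 30_000].each do |size|
  puts "#{size} queued -> #{desired_capacity_for(size)} instance(s)"
end
```

Compared with the class above, this only decides the target; whether to call `update_auto_scaling_group` (for example, skipping the call when the capacity is unchanged) stays with the caller.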

Keep the metrics updated

We set up a cron job that checks the queue size every 5 minutes.

Terminating Auto Scaling instances

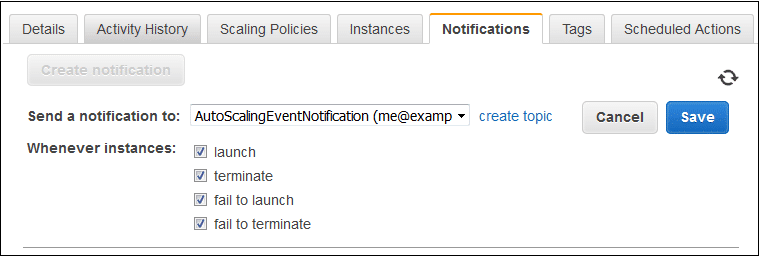

When you use Auto Scaling to scale your applications automatically, it is useful to know when Auto Scaling is launching or terminating the EC2 instances in your Auto Scaling group. Amazon SNS coordinates and manages the delivery or sending of notifications to subscribing clients or endpoints. You can configure Auto Scaling to send an SNS notification whenever your Auto Scaling group scales up or down.

In our case, we configured our Auto Scaling group to use the autoscaling:EC2_INSTANCE_TERMINATE notification type. When the group terminates an instance, it sends an email notification containing details of the terminated instance, such as the instance ID and the reason it was terminated.

The following guide explains how to set up SNS notifications for an Auto Scaling group:

http://docs.aws.amazon.com/autoscaling/latest/userguide/ASGettingNotifications.html

Configure Your Auto Scaling Group to Send Notifications

- Open the Amazon EC2 console at https://console.aws.amazon.com/ec2/.

- On the navigation pane, under Auto Scaling, choose Auto Scaling Groups.

- Select your Auto Scaling group.

- On the Notifications tab, choose Create notification.

- On the Create notifications pane, do the following:

  a. For Send a notification to, select your SNS topic.

  b. For Whenever instances, select the events to send notifications for; in our case, we selected the terminate option.

  c. Choose Save.
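The same notification can also be set up from code instead of the console. A minimal sketch, assuming the v1 AWS SDK for Ruby, whose `AWS::AutoScaling::Client#put_notification_configuration` wraps the PutNotificationConfiguration API. The helper name, the stand-in client, the group name and the topic ARN are hypothetical placeholders; the client is passed in so the method can be tried without AWS access.

```ruby
# Hypothetical helper: subscribe an SNS topic to instance-termination events
# of an Auto Scaling group. `client` is expected to be an
# AWS::AutoScaling::Client (aws-sdk v1) or anything with the same method.
def enable_termination_notifications(client, group_name:, topic_arn:)
  client.put_notification_configuration(
    auto_scaling_group_name: group_name,
    topic_arn: topic_arn,
    notification_types: ['autoscaling:EC2_INSTANCE_TERMINATE']
  )
end

# Stand-in client that records the request instead of calling AWS.
class RecordingClient
  attr_reader :requests

  def initialize
    @requests = []
  end

  def put_notification_configuration(params)
    @requests << params
  end
end

client = RecordingClient.new
enable_termination_notifications(client,
                                 group_name: 'api-server-asg',
                                 topic_arn: 'arn:aws:sns:us-west-2:123456789012:example-topic')
p client.requests.first[:notification_types]
```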

As noted earlier, Sidekiq has to be stopped gracefully on a terminating instance; otherwise, any jobs still busy in its Sidekiq processes are lost. The rest of this section explains how we do this.

Auto Scaling can send Amazon SNS notifications when instance launch or terminate events occur. We used the same mechanism to detect that an Auto Scaling instance is terminating, so that we can stop Sidekiq on it gracefully.
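On the receiving side, the API server has to pick the useful fields out of the notification. A sketch using Ruby's standard `json` library, assuming the notification reaches an HTTP(S) endpoint as a standard SNS envelope whose `Message` field holds the lifecycle-hook JSON (`EC2InstanceId`, `LifecycleActionToken`, `LifecycleHookName`, `AutoScalingGroupName`, `LifecycleTransition`). The method name, the returned hash keys and the sample values are hypothetical. A real endpoint must also confirm the SNS subscription and should verify message signatures, which is omitted here.

```ruby
require 'json'

TERMINATING = 'autoscaling:EC2_INSTANCE_TERMINATING'

# Hypothetical helper: extract what the drain step needs from an SNS HTTP
# notification body. Returns nil for anything other than a terminating
# lifecycle-hook notification (e.g. subscription confirmations, test events).
def parse_termination_notice(body)
  envelope = JSON.parse(body)
  return nil unless envelope.is_a?(Hash) && envelope['Type'] == 'Notification'
  return nil unless envelope['Message'].is_a?(String)

  message = JSON.parse(envelope['Message'])
  return nil unless message.is_a?(Hash) && message['LifecycleTransition'] == TERMINATING

  {
    instance_id: message['EC2InstanceId'],
    token: message['LifecycleActionToken'],
    hook_name: message['LifecycleHookName'],
    group_name: message['AutoScalingGroupName']
  }
rescue JSON::ParserError
  nil
end

sample = {
  'Type' => 'Notification',
  'Message' => {
    'LifecycleTransition' => TERMINATING,
    'EC2InstanceId' => 'i-0123456789abcdef0',
    'LifecycleActionToken' => 'example-token',
    'LifecycleHookName' => 'Auto-Scale-Ec2-Termination',
    'AutoScalingGroupName' => 'api-server-asg'
  }.to_json
}.to_json
p parse_termination_notice(sample)
```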

Our approach was as follows:

1) We configured SNS notifications on the Auto Scaling group for the autoscaling:EC2_INSTANCE_TERMINATE event.

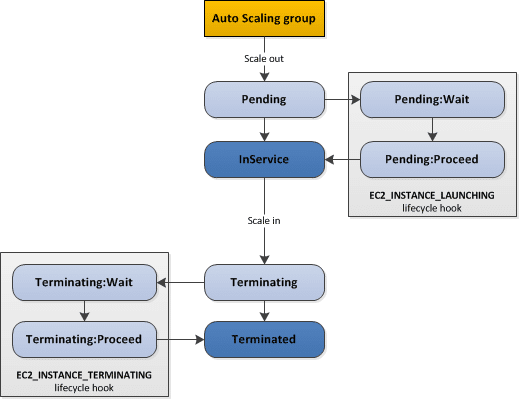

2) We added a lifecycle hook. A lifecycle hook tells Auto Scaling that you want to perform an action on an instance when it is not actively in service, for example when the instance launches or before it terminates.

When you add a lifecycle hook, you have the option to be notified when the instance enters a wait state so that you can perform the corresponding custom action.

So we added a hook for the autoscaling:EC2_INSTANCE_TERMINATING transition:

```ruby
# @client is the AWS::AutoScaling::Client created in QueueSizeMetric.
@client.put_lifecycle_hook(
  auto_scaling_group_name: AUTO_SCALING_GROUP_NAME,
  lifecycle_hook_name: 'Auto-Scale-Ec2-Termination',
  lifecycle_transition: 'autoscaling:EC2_INSTANCE_TERMINATING',
  notification_target_arn: 'arn:aws:sns:us-west-2:496361111699:Auto-Scale-EC2-Termination',
  role_arn: 'arn:aws:iam::492361111699:role/sns-publish'
)
```
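With this hook in place, a terminating instance waits in the Terminating:Wait state until the application calls CompleteLifecycleAction or the hook's heartbeat timeout runs out. A sketch of that final call, assuming the v1 SDK's `AWS::AutoScaling::Client#complete_lifecycle_action`; the helper name, the stand-in client and the sample values are hypothetical, and in practice the group name, hook name and token come from the SNS notification.

```ruby
# Hypothetical helper: let Auto Scaling proceed with terminating the instance
# once Sidekiq has drained. `client` is expected to be an
# AWS::AutoScaling::Client (aws-sdk v1); `notice` holds fields taken from the
# lifecycle-hook notification.
def complete_termination(client, notice)
  client.complete_lifecycle_action(
    auto_scaling_group_name: notice.fetch(:group_name),
    lifecycle_hook_name: notice.fetch(:hook_name),
    lifecycle_action_token: notice.fetch(:token),
    lifecycle_action_result: 'CONTINUE'
  )
end

# Stand-in client that records the call instead of contacting AWS.
FakeClient = Struct.new(:calls) do
  def complete_lifecycle_action(params)
    calls << params
  end
end

client = FakeClient.new([])
notice = { group_name: 'api-server-asg', hook_name: 'Auto-Scale-Ec2-Termination', token: 'example-token' }
complete_termination(client, notice)
p client.calls.first[:lifecycle_action_result] # prints "CONTINUE"
```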

The following illustration shows the transitions between instance states in this process:

Please refer to the following links:

http://docs.aws.amazon.com/autoscaling/latest/userguide/lifecycle-hooks.html

http://docs.aws.amazon.com/cli/latest/reference/autoscaling/put-lifecycle-hook.html

- When an Auto Scaling instance is terminating, an SNS notification is sent to our API server. The notification carries a JSON message containing the instance ID, the LifecycleActionToken, and so on. When the server receives the notification, our code performs the following steps:

a. Find the instance's IP address from its instance ID.

b. Log in to the instance over SSH.

c. Send the USR1 signal to Sidekiq. USR1 tells Sidekiq to stop accepting new jobs, so it will not pick up anything more from the enqueued jobs. For example:

```shell
ps -ef | grep sidekiq | grep -v grep | awk '{print $2}' | xargs kill -USR1
```

Then calculate the total number of busy jobs.

d. If the total is greater than 0, repeat steps c and d until it becomes 0. Once the number of busy jobs reaches 0, call the complete lifecycle action API for the Auto Scaling group, which tells Auto Scaling that it can continue terminating the instance. The instance is then terminated.
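Steps c and d amount to a polling loop. A sketch with the SSH/signal step, the busy-job count and the completion call passed in as callables, so the loop itself runs without AWS or SSH; all names and sample hostnames are hypothetical. For the count, entries of Sidekiq's `Sidekiq::ProcessSet` expose `'hostname'` and `'busy'`, which is one way to sum busy jobs for a host. The `max_checks` limit is our addition, so a stuck job cannot hold the loop forever. Note also that USR1 is the quiet signal for the Sidekiq versions of that time; Sidekiq 5.0 and later use TSTP, with USR1 deprecated.

```ruby
# Hypothetical drain loop for one terminating instance.
#   quiet:      sends the quiet signal (e.g. USR1 over SSH, as described above)
#   busy_count: returns the number of jobs still running on the instance
#   complete:   tells Auto Scaling to continue terminating the instance
# Returns true if the instance drained and was released, false if it gave up.
def drain_and_release(quiet:, busy_count:, complete:, interval: 10, max_checks: 360,
                      sleeper: ->(seconds) { sleep(seconds) })
  max_checks.times do
    quiet.call                 # step c: stop picking up new jobs
    if busy_count.call.zero?   # step d: nothing left in progress
      complete.call
      return true
    end
    sleeper.call(interval)
  end
  false
end

# Sums busy jobs on one host from Sidekiq::ProcessSet-style entries
# (each responds to ['hostname'] and ['busy']).
def busy_jobs_on(host, processes)
  processes.select { |pr| pr['hostname'] == host }.sum { |pr| pr['busy'].to_i }
end

# Demo with canned data instead of SSH and Redis.
snapshots = [
  [{ 'hostname' => 'ip-10-0-0-12', 'busy' => 3 }, { 'hostname' => 'other', 'busy' => 7 }],
  [{ 'hostname' => 'ip-10-0-0-12', 'busy' => 1 }],
  [{ 'hostname' => 'ip-10-0-0-12', 'busy' => 0 }]
]
signals = 0
released = drain_and_release(
  quiet: -> { signals += 1 },
  busy_count: -> { busy_jobs_on('ip-10-0-0-12', snapshots.shift) },
  complete: -> { puts 'lifecycle action completed' },
  sleeper: ->(_seconds) {}
)
puts "released=#{released}, quiet signals sent=#{signals}"
```

Re-sending the quiet signal on every pass mirrors the steps above and is harmless; the loop only releases the instance once the busy count for that host reaches zero.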

Conclusion

By auto scaling our Sidekiq instances according to the queue size, we achieved more concurrency and processed the same jobs in less time.

Referred Links: